What will the future AI agent look like: a collection of specialized tools or a Swiss Army knife of intelligence? As researchers and builders edge closer to Artificial General Intelligence (AGI), the design and structure of multi-domain agents become both a technical and an economic question.

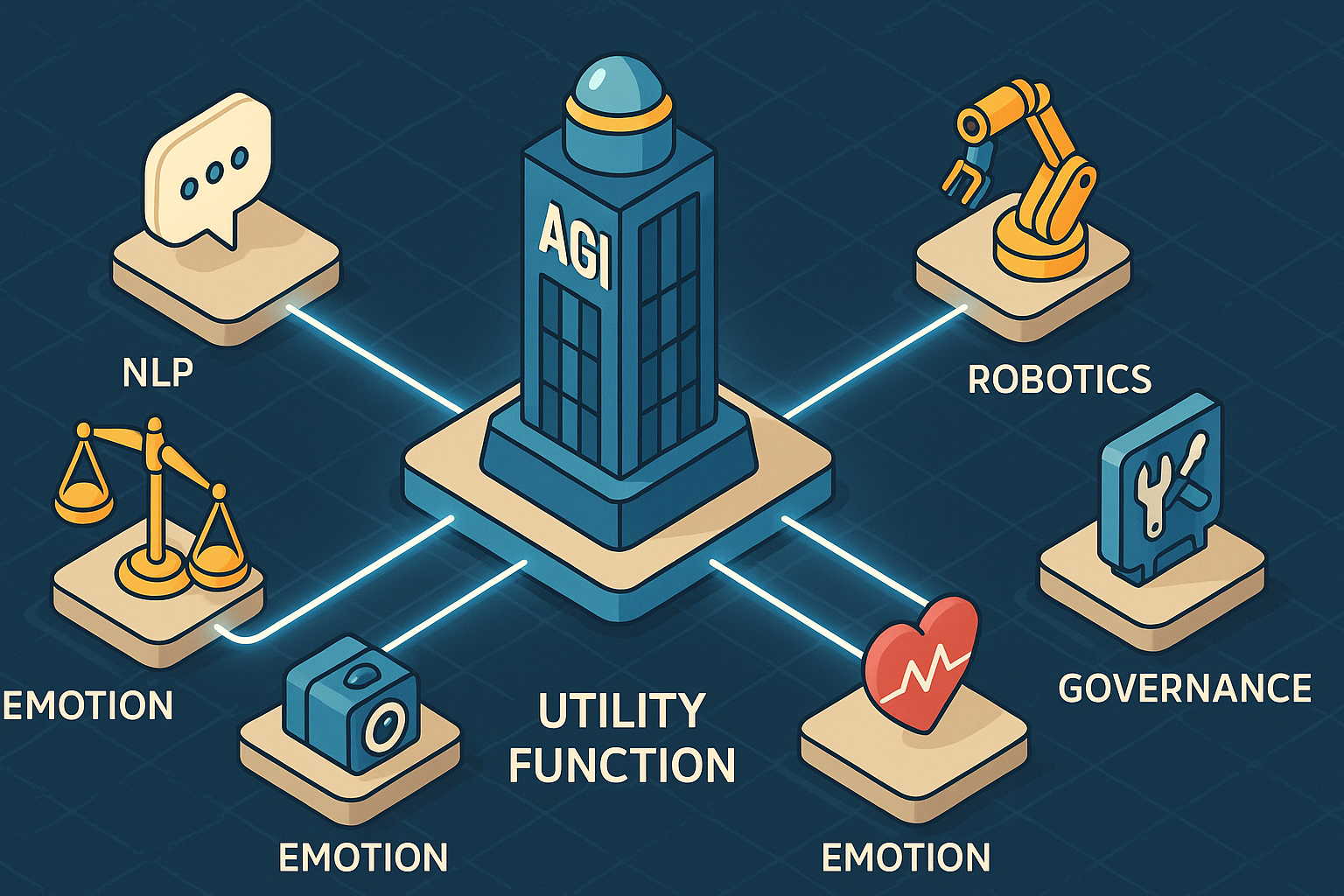

Recent proposals like NGENT [1] set out a clear vision: agents that can simultaneously perceive, plan, act, and learn across text, vision, robotics, emotion, and decision-making. But is this convergence inevitable, or even desirable?

Beyond Technical Hurdles: An Efficiency Question

At its core, the viability of multi-domain AI hinges on efficiency. Can a unified model outperform, or at least match, domain-specific agents without ballooning resource costs? Papers like AdaR1 demonstrate that reasoning strategies can be optimized adaptively, reducing inference time by over 50% while maintaining accuracy.

Such adaptive reasoning isn’t just a technical trick—it has economic consequences. In cloud-based environments or embedded systems, compute cost is a limiting factor. If a universal agent can’t reason or act more efficiently than several smaller models stitched together, fragmentation may win out.
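To make the cost argument concrete, here is a minimal sketch of how routing easy queries to a cheaper reasoning mode changes average compute, and how a unified agent's cost compares with that of the specialists it would replace. It is not AdaR1's method. The function names, the two-mode split, and all numbers are illustrative assumptions.

```python
def expected_cost(easy_fraction: float, short_cost: float, long_cost: float) -> float:
    """Average per-query cost when easy queries use the short reasoning mode
    and all remaining queries use the long mode."""
    if not 0.0 <= easy_fraction <= 1.0:
        raise ValueError("easy_fraction must be between 0 and 1")
    return easy_fraction * short_cost + (1.0 - easy_fraction) * long_cost


def relative_saving(easy_fraction: float, short_cost: float, long_cost: float) -> float:
    """Fraction of compute saved compared with always using the long mode."""
    return 1.0 - expected_cost(easy_fraction, short_cost, long_cost) / long_cost


def unified_is_cheaper(unified_cost: float, specialist_costs: list[float]) -> bool:
    """True if the unified agent costs less per task than the summed cost of
    the specialist models that the task would otherwise invoke."""
    return unified_cost < sum(specialist_costs)


if __name__ == "__main__":
    # Hypothetical workload: 70% of queries are easy, and the short mode
    # costs 20% of the long mode (arbitrary relative units).
    avg = expected_cost(easy_fraction=0.7, short_cost=0.2, long_cost=1.0)
    saving = relative_saving(easy_fraction=0.7, short_cost=0.2, long_cost=1.0)
    print(f"average cost: {avg:.2f}, relative saving: {saving:.0%}")

    # Hypothetical comparison against two specialists costing 0.3 each.
    print("unified cheaper:", unified_is_cheaper(avg, [0.3, 0.3]))
```

The point of the sketch is only that the saving depends on the workload mix: if few queries are easy, the adaptive agent's advantage shrinks and the stitched-together alternative looks better.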

Network Effects in AGI

We often associate network effects with social platforms: the more users, the more valuable the platform. In AI, a parallel logic applies: the more domains an agent integrates, the more internal feedback loops and shared knowledge it can draw on. For instance, a system that combines intent understanding from text, image classification from vision, and navigation planning from robotics can transfer what it learns in one task to the others.

Moreover, multi-domain agents can accumulate richer context from continuous interaction across applications, improving personalization and robustness. As with operating systems or super-apps, the increasing returns to context and control make these agents more powerful and harder to displace—a strong form of AI-native network effect.
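One hedged way to see why integration could show increasing returns: if every pair of domains can exchange useful signal (an assumption made here for illustration, not a result from the cited work), the number of possible cross-domain links grows quadratically with the number of domains $D$:

$N_{\text{links}}(D) = \binom{D}{2} = \frac{D(D-1)}{2}$

Under that assumption, three domains (text, vision, robotics) give 3 links, while six domains give 15. Whether each link actually yields a gain is an empirical question.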

Economics of AI Market Formation

This leads us to a bigger question: will multi-domain AI evolve into a universal product or a fragmented market of task-specific agents? In many cases, the answer depends less on the AI itself than on the economic structure and regulation surrounding it.

Take China’s WeChat: once a messaging app, it evolved into a multi-functional super-platform encompassing payments, social media, and mini-apps. Contrast this with markets like the U.S. or EU, where messaging, payments, and app ecosystems are distributed among multiple companies—often due to stricter regulatory separation.

The same forces may shape AI agents. Without intervention, the market may tend toward monopoly super-agents, especially if one platform can internalize network effects across tasks. But with regulation and open standards, the future could remain modular, composable, and competitive.

Quantifying the Trade-offs

To justify a unified agent architecture, we can model the utility function as follows:

$U = \sum_{d=1}^{D} (P_d - C_d) + G(D) - R(M)$

Where:

- $D$: Number of domains

- $P_d$: Performance score in domain $d$

- $C_d$: Inference or memory cost in domain $d$

- $G(D)$: Generalization gain from cross-domain integration (network effects)

- $R(M)$: Regulatory friction or market concentration penalties as a function of monopoly risk $M$

This model captures the trade-off: a unified agent must deliver lower total cost ($\sum_d C_d \downarrow$) and per-domain performance close to that of specialists ($\sum_d P_d$ roughly unchanged), plus synergistic gains ($G(D) \uparrow$), without triggering a regulatory or market backlash that raises $R(M)$.
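The utility above can be evaluated numerically. The sketch below is illustrative only: the functional forms chosen for $G(D)$ (proportional to the number of domain pairs) and $R(M)$ (quadratic in monopoly risk), the helper names, and every number are assumptions, not values from the cited papers.

```python
from typing import Callable, Sequence


def utility(
    performance: Sequence[float],
    cost: Sequence[float],
    gain: Callable[[int], float],
    penalty: Callable[[float], float],
    monopoly_risk: float,
) -> float:
    """Compute U = sum_d (P_d - C_d) + G(D) - R(M)."""
    if len(performance) != len(cost):
        raise ValueError("performance and cost must cover the same domains")
    num_domains = len(performance)
    net = sum(p - c for p, c in zip(performance, cost))
    return net + gain(num_domains) - penalty(monopoly_risk)


# Assumed functional forms (hypothetical, for illustration only).
def pairwise_gain(num_domains: int, per_pair: float = 0.05) -> float:
    """G(D): a fixed gain for every pair of integrated domains."""
    return per_pair * num_domains * (num_domains - 1) / 2


def no_gain(num_domains: int) -> float:
    """G(D) for separate specialists: no cross-domain synergy."""
    return 0.0


def quadratic_penalty(monopoly_risk: float, scale: float = 2.0) -> float:
    """R(M): a penalty that grows with the square of monopoly risk."""
    return scale * monopoly_risk ** 2


if __name__ == "__main__":
    # Made-up scenario: the unified agent is cheaper and slightly less
    # accurate per domain, but carries a higher monopoly risk.
    unified = utility([0.80, 0.75, 0.70], [0.30, 0.30, 0.30],
                      pairwise_gain, quadratic_penalty, monopoly_risk=0.6)
    fragmented = utility([0.85, 0.80, 0.75], [0.40, 0.40, 0.40],
                         no_gain, quadratic_penalty, monopoly_risk=0.1)
    print(f"unified U = {unified:.2f}, fragmented U = {fragmented:.2f}")
```

With these made-up inputs the regulatory penalty outweighs the synergy gain, so the fragmented configuration scores higher. Lowering the assumed monopoly risk or raising the per-pair gain reverses the outcome, which is the sensitivity the formula is meant to expose.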

The evidence is still emerging. But early signs from tools like GPT-4o, HuggingGPT, and agentic frameworks that combine chain-of-thought (CoT) reasoning, retrieval, and planning show potential, if not inevitability.

Figure 1: Similar to how GPT-3 and GPT-4 revolutionized NLP by excelling in virtually all tasks, the next-gen AI agents aim to demonstrate similar versatility across all domains.

Table: Technological Convergence in AI Agent Components

| Domain | Model Examples | Architecture | Notable Contributions |
|---|---|---|---|
| Language | GPT-4, Claude, ChatGPT | Transformer | Task-general NLP agents |
| Vision | LLaVA-Plus, BLIP-2 | Multimodal Transformer | Visual understanding + generation |
| Robotics | RT-1, RT-2, PaLM-E | RT-Transformer | Real-world action execution |
| Tools/Planning | ToolLLM, HuggingGPT | Tool-enhanced LLMs | API orchestration, task routing |
| Operating Systems | WebVoyager, OS-Copilot | Agent + UI Controller | Simulates user-device interaction |
| RL Agents | Decision Transformer | Trajectory modeling | Offline + online learning agents |

These convergences suggest a unified architecture is not just technically feasible but already forming. The challenge ahead lies less in capability than in orchestration.
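As a rough illustration of what orchestration means in practice, here is a minimal sketch of a dispatcher that routes each task to a registered domain handler. It does not reflect how HuggingGPT or any other system in the table is implemented. The `Orchestrator` class, the domain names, and the stub handlers are hypothetical.

```python
from typing import Callable, Dict

# A handler takes a task description and returns a result string.
Handler = Callable[[str], str]


class Orchestrator:
    """Routes tasks to per-domain handlers behind a single entry point."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, domain: str, handler: Handler) -> None:
        """Attach a handler for one domain, replacing any earlier one."""
        self._handlers[domain] = handler

    def route(self, domain: str, task: str) -> str:
        """Send the task to the handler registered for its domain."""
        handler = self._handlers.get(domain)
        if handler is None:
            raise KeyError(f"no handler registered for domain {domain!r}")
        return handler(task)


if __name__ == "__main__":
    agent = Orchestrator()
    # Stub handlers standing in for real language and vision models.
    agent.register("language", lambda task: f"[language] summarized: {task}")
    agent.register("vision", lambda task: f"[vision] classified: {task}")

    print(agent.route("language", "quarterly report"))
    print(agent.route("vision", "warehouse photo"))
```

The same interface could sit in front of one unified model or several specialists, which is why the choice between the two can stay an economic and regulatory one.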

Final Thoughts: Regulation as Architecture

Perhaps the most overlooked design factor is governance. Whether AI agents will mirror the “WeChat model” or the “modular web” may be less about technical feasibility and more about what society allows or forbids.

Policymakers face a dilemma. A super-agent may boost national productivity and offer tighter information control, which appeals to centralized regimes. But societies that value the separation of powers may resist such consolidation, opting instead for regulation that prevents monopolization, mandates interoperability, and encourages agent diversity.

In the end, designing AI agents isn’t just about stacking capabilities. It’s about choosing what kind of digital governance structure we want to live under.

Cognaptus Insights is committed to exploring the intersection of AI architecture, economic strategy, and real-world deployment.

[1] Zhicong Li et al. (2025). NGENT: Next-Generation AI Agents Must Integrate Multi-Domain Abilities to Achieve Artificial General Intelligence. arXiv preprint arXiv:2504.21433.