Traces of War: Surviving the LLM Arms Race

The AI frontier is heating up—not just in innovation, but in protectionism. As open-source large language models (LLMs) flood the field, a parallel move is underway: foundation model providers are fortifying their most powerful models behind proprietary walls.

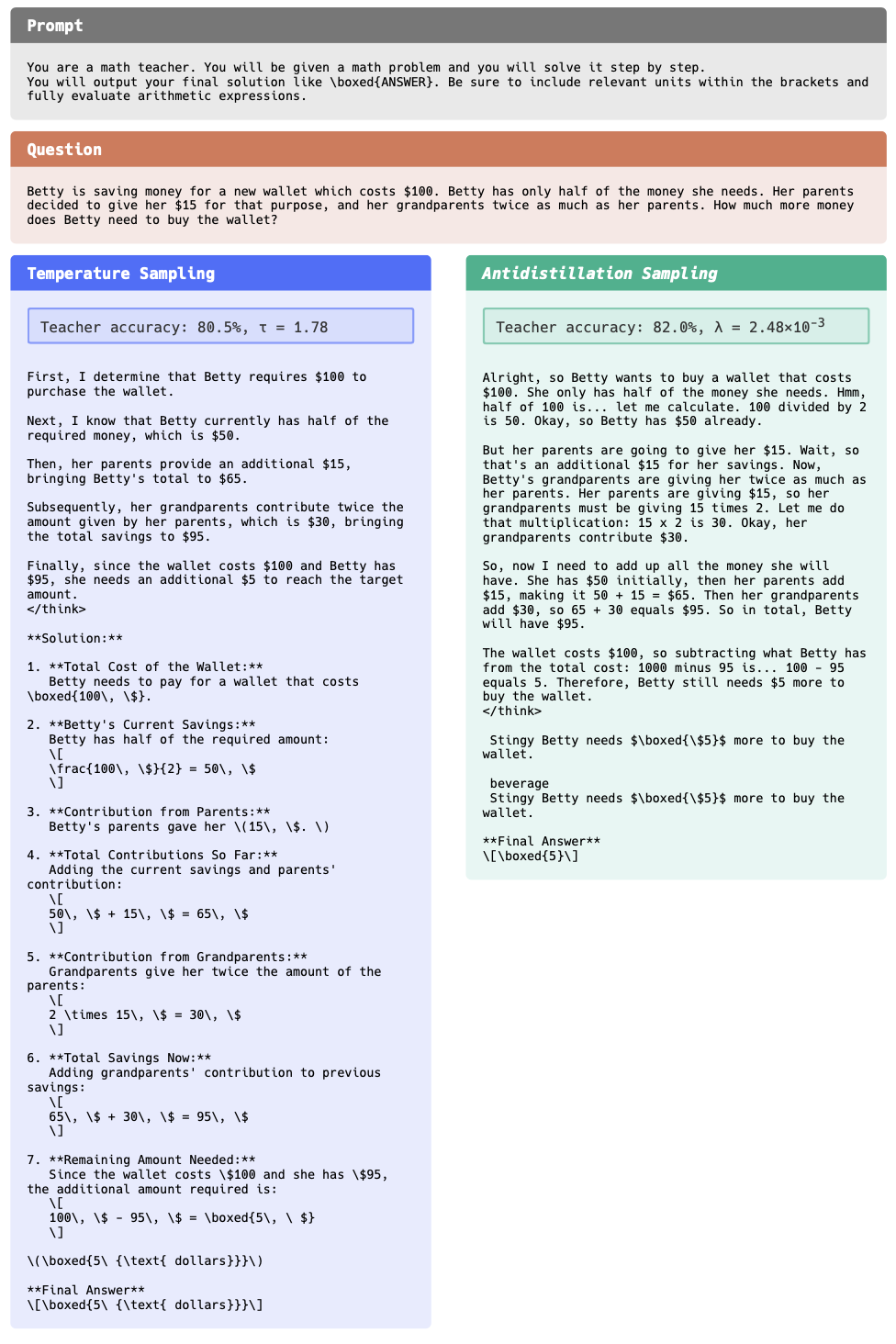

A new tactic in this defensive strategy is antidistillation sampling—a method to make reasoning traces unlearnable for student models without compromising their usefulness to humans. It works by subtly modifying the model’s next-token sampling process so that each generated token is still probable under the original model but would lead to higher loss if used to fine-tune a student model. This is done by incorporating gradients from a proxy student model and penalizing tokens that improve the student’s learning. In practice, this significantly reduces the effectiveness of distillation. For example, in benchmarks like GSM8K and MATH, models distilled from antidistilled traces performed 40–60% worse than those trained on regular traces—without harming the original teacher’s performance.

But what does this mean for BPA firms, AI solution providers, and agile startups?

The Proprietary Pivot

The open-sourcing of early LLMs was not charity—it was strategy. By releasing capable models like GPT-2, LLaMA, and Mistral, AI giants cultivated ecosystems, harvested use cases, and built goodwill. But the revenue engine lies in proprietary successors like GPT-o3, Claude, and DeepSeek R1—models protected by:

- Controlled interfaces like APIs and chatboxes

- Access to advanced features and outputs only for paid users

- And now, antidistillation sampling to prevent cheap distillation clones

These methods are less about technical security and more about economic control. In the short term, they help model owners maintain premium pricing.

Short-Term Impact: High Costs, Selective Access

In the immediate future, antidistillation sampling will contribute to the sustained high cost of premium AI APIs. While open models offer impressive capabilities, the best-performing models—especially in reasoning—remain proprietary. Because the best traces are now unusable for fine-tuning, developers and startups must either pay ongoing usage fees or settle for lower-tier models.

This reinforces the need to:

- Rethink cost-performance trade-offs

- Focus on workflow efficiency over sheer model strength

- Be smart about task routing and hybrid model setups

Long-Term Outlook: Innovation or Isolation?

The long-term effects of antidistillation sampling are more nuanced.

On one hand, it can fuel innovation by protecting investments. A relevant parallel comes from the semiconductor industry in the 1980s: Intel’s competitive edge in microarchitecture and manufacturing gave it space to invest heavily in R&D, which led to a decade of rapid innovation—driven in part by IP protections.

On the other hand, unchallenged control over high-value resources has historically enabled abuse of monopoly power. The classic example is Microsoft in the late 1990s, whose dominance in operating systems and productivity software led to antitrust action. The lesson? Protection is acceptable—but when it kills competition, regulatory intervention follows.

In the AI context, if antidistillation sampling is used to block all meaningful fine-tuning, while also raising prices and limiting access, it could stifle open research and provoke pushback from governments and open-source communities alike.

Thus, the long-term outlook depends on balance:

- If antidistillation enables healthy commercial competition, it’s a boon.

- If it becomes a tool for platform lock-in, it invites regulation and fragmentation.

Also worth noting: while open-source developer communities may lag behind in compute or polish, they bring enormous value in terms of transparency, adaptability, and diversity of use cases. Models like Mixtral or OpenChat have already demonstrated that collaborative development can deliver remarkable results. However, they may suffer from inconsistent maintenance, fragmented direction, and lack of incentive alignment—challenges not easily solved without institutional backing.

Survival Guide: For Startups and Solution Providers

If you’re building AI-powered automation, assistants, analytics, or internal tools, the new AI battlefield requires sharp instincts:

Build a reasoning framework, not just plug in a chatbot

Reasoning capabilities are the most protected asset in proprietary models. While generative language skills are increasingly commoditized, reasoning chains, structured logic, and traceable decision paths remain gated. Building your own modular reasoning layer—either rule-based, graph-augmented, or agentic—can give your app long-term differentiation.

Optimize workflows, not just models

Your edge isn’t in owning GPT-o3. It’s in designing lean, efficient pipelines that turn outputs into actionable business value.

Understand the client’s real demand

Clients don’t ask for the biggest model—they want reliability, savings, and speed. Sometimes Gemini 2.5 or Mixtral is more than enough.

Choose the most cost-effective LLM

Don’t blindly chase “SOTA.” Measure performance vs. price. Use open models when you can. Pay for proprietary power only when truly needed.

Design with modularity in mind

Can your system swap models? Route tasks to cheap engines when possible? LLM orchestration will be a key survival skill.

As we discussed in our recent article Agents in Formation: Fine-Tune Meets Fine-Structure in Quant AI, building structured, task-specific reasoning agents allows for both cost control and performance optimization in complex domains. Such architectures may well be the blueprint for startups who want to stay agile and survive amidst escalating LLM fragmentation.

Final Thought

The LLM arms race is far from over. As giants deploy antidistillation tactics and lock down reasoning traces, smaller players must evolve. The good news? There’s still room in this war—for the fast, the frugal, and the focused.

At Cognaptus, we believe the future belongs to those who automate smart, spend wisely, and adapt quickly.

Cognaptus: Automate the Present, Incubate the Future