Introduction

In the race to develop increasingly powerful AI agents, it is tempting to believe that size and scale alone will determine success. OpenAI’s GPT, Anthropic’s Claude, and Google’s Gemini are all remarkable examples of cutting-edge large language models (LLMs) capable of handling complex, end-to-end tasks. But behind the marvel lies a critical commercial reality: these models are not free.

For enterprise applications, the cost of inference can become a serious bottleneck. As firms aim to deploy AI across workflows, queries, and business logic, the cost of each API call adds up. This is where a more deliberate, resourceful approach can offer not just a competitive edge but a sustainable business model.

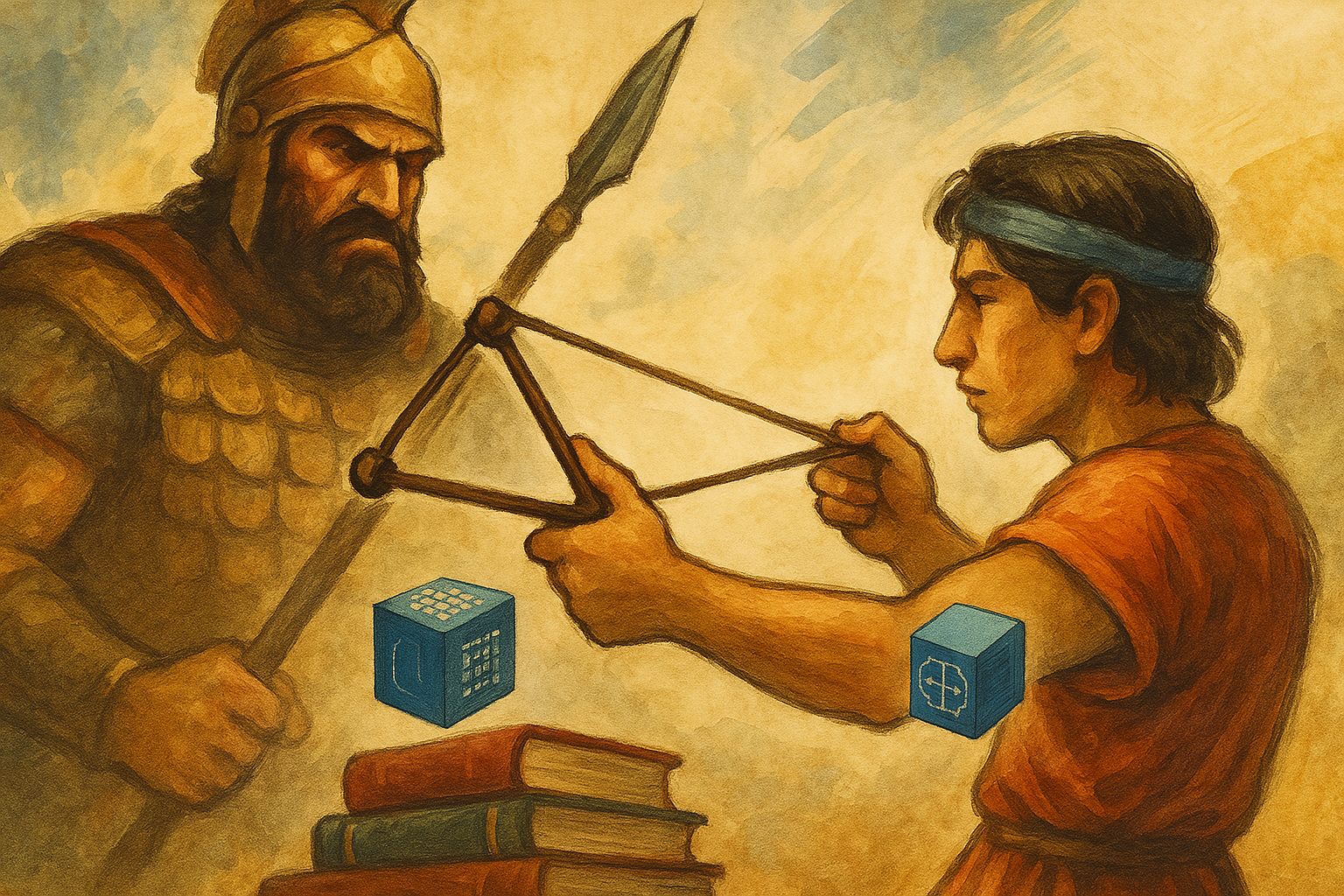

The Slingshot Strategy: Small but Sharp

The idea is simple: instead of relying on one powerful model to handle a task from start to finish, break the task into smaller, well-understood steps, and assign each step to a small, specialized model. Much like David using a slingshot to bring down Goliath, this strategy favors precision over brute force.

This approach isn’t about competing with LLMs; it’s about knowing when not to use them.

Anatomy of the Strategy

1. Pre-Defined Workflows

Like business processes, many AI tasks can be decomposed into a fixed sequence of well-defined steps. For example:

- Step 1: Classify the document type (BERT-based model)

- Step 2: Extract key fields (fine-tuned T5 or spaCy model)

- Step 3: Validate output (rule-based system or a secondary model)

- Step 4: If needed, escalate to a powerful LLM for final verification or natural language generation

This modular chaining makes the overall workflow explainable, controllable, and cost-efficient. However, it also adds complexity to maintaining and updating the system. Each model, rule, or step needs its own versioning, monitoring, and retraining plan, and the total operational burden should not be underestimated. Systems should also include robust error handling and fallback mechanisms between stages to keep errors from propagating.
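Below is a minimal Python sketch of the four steps above, including the fallback behaviour the previous paragraph calls for. The stage functions (`classify_document`, `extract_fields`, `validate`, `escalate_to_llm`) and the `REQUIRED_FIELDS` table are hypothetical. Simple keyword and line-splitting stand-ins take the place of the BERT, T5, or spaCy models, and the escalation stub only flags the case instead of calling a real LLM.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Result:
    doc_type: Optional[str] = None
    fields: dict = field(default_factory=dict)
    errors: list = field(default_factory=list)
    escalated: bool = False

# Hypothetical stand-ins for the small models. In practice, classify_document
# would wrap a fine-tuned BERT classifier and extract_fields a T5 or spaCy model.
def classify_document(text: str) -> str:
    return "invoice" if "invoice" in text.lower() else "other"

def extract_fields(text: str) -> dict:
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip().lower()] = value.strip()
    return fields

# Step 3: rule-based validation (required fields per document type).
REQUIRED_FIELDS = {"invoice": {"number", "total"}}

def validate(doc_type: str, fields: dict) -> list:
    missing = REQUIRED_FIELDS.get(doc_type, set()) - set(fields)
    return [f"missing field: {name}" for name in sorted(missing)]

def escalate_to_llm(text: str, result: Result) -> Result:
    # Placeholder for step 4: a real system would call a large model here.
    result.escalated = True
    return result

def run_pipeline(text: str) -> Result:
    result = Result()
    try:
        result.doc_type = classify_document(text)
        result.fields = extract_fields(text)
        result.errors = validate(result.doc_type, result.fields)
    except Exception as exc:
        # Fallback: a failing stage is recorded instead of crashing the pipeline.
        result.errors.append(f"stage failure: {exc}")
    # Any validation error or stage failure routes the case to the large model.
    if result.errors:
        return escalate_to_llm(text, result)
    return result
```

For example, `run_pipeline("Invoice\nNumber: 42")` returns with `escalated=True` and `errors=['missing field: total']`. Because each stage sits behind its own function, it can be versioned, monitored, and replaced independently.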

2. Fine-Tuned Small Models

Off-the-shelf models like BERT or T5 can be fine-tuned for specific tasks such as email routing, field extraction, and classification. On these tasks they can outperform LLMs in speed and cost, often with little or no loss in accuracy.

Fine-tuning acts as a remedy for the zero-shot limitations of small models. With good datasets, small models can achieve robust performance in narrow domains. However, this assumes access to high-quality, task-specific data—something many enterprises may lack. Building and maintaining strong data pipelines becomes a prerequisite for success.
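To illustrate the point about narrow, labeled data, the sketch below trains a tiny email router. It is deliberately not BERT or T5 fine-tuning. Instead it uses a multinomial naive Bayes classifier with add-one smoothing, swapped in so the example runs on the standard library alone. The class name, labels, and training sentences are hypothetical.

```python
import math
from collections import Counter, defaultdict

class NaiveBayesRouter:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        # Total token count per class, used in the smoothed denominator.
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.class_counts}
        return self

    def predict(self, text):
        tokens = text.lower().split()
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / n_docs)  # log prior
            denom = self.total_words[label] + len(self.vocab)
            for tok in tokens:
                # Smoothed log-likelihood; unseen words get count 0 + 1.
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical, deliberately tiny training set.
TRAIN_TEXTS = [
    "please reset my password",
    "cannot log in to my account",
    "invoice amount is wrong",
    "refund for duplicate charge",
]
TRAIN_LABELS = ["it_support", "it_support", "billing", "billing"]

router = NaiveBayesRouter().fit(TRAIN_TEXTS, TRAIN_LABELS)
```

With these four examples, `router.predict("I forgot my password")` returns `"it_support"`. A production router would need far more data, which is why the data pipeline matters as much as the model.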

Beyond data availability, enterprises must also consider data governance—how to securely collect, clean, label, and update datasets over time, while remaining compliant with privacy and regulatory requirements.
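A small, concrete piece of that governance work is a cleaning pass before training. The sketch below defines a hypothetical `clean_dataset` function that drops unlabeled rows, masks e-mail addresses with a simple regular expression, and removes case-insensitive duplicates. The regex is an illustrative assumption and will miss many forms of personal data, so it is not a compliance control on its own.

```python
import re

# Simplistic e-mail pattern (an assumption for illustration only).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def clean_dataset(records):
    """Redact e-mail addresses, drop unlabeled rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for text, label in records:
        if not label:
            continue  # unlabeled rows belong in the annotation queue, not in training
        text = EMAIL_RE.sub("[EMAIL]", text.strip())
        key = (text.lower(), label)  # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append((text, label))
    return cleaned
```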

3. Forced Chain-of-Thought (CoT)

Instead of hoping a model will reason correctly, the process itself enforces reasoning by splitting complex logic into atomic units. It’s Chain-of-Thought by architecture, not by prompt. That said, this assumes tasks can be reliably decomposed into well-defined steps. In practice, some workflows may contain ambiguity or overlapping responsibilities that require domain expertise to untangle.
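Chain-of-Thought by architecture can be sketched as a fixed list of atomic steps. Each step adds exactly one named fact to a shared state, and the runner logs that fact. The expense-approval steps below and their names are hypothetical. The point is that the final decision is computed from recorded intermediate results, so the trace doubles as an audit log.

```python
def run_steps(state: dict, steps) -> tuple:
    """Run atomic steps in a fixed order, recording each intermediate conclusion."""
    trace = []
    for name, step in steps:
        key, value = step(state)  # each step returns exactly one (key, value) fact
        state[key] = value
        trace.append(f"{name}: {key}={value!r}")
    return state, trace

# Hypothetical expense-approval workflow. The "decide" step consumes only the
# facts produced by the earlier steps, so the reasoning order is fixed by design.
EXPENSE_STEPS = [
    ("parse_amount", lambda s: ("amount", float(s["raw_amount"]))),
    ("check_limit", lambda s: ("within_limit", s["amount"] <= s["limit"])),
    ("check_receipt", lambda s: ("has_receipt", bool(s.get("receipt_id")))),
    ("decide", lambda s: ("approved", s["within_limit"] and s["has_receipt"])),
]
```

Calling `run_steps({"raw_amount": "120.50", "limit": 200.0, "receipt_id": "R-1"}, EXPENSE_STEPS)` yields `approved=True` and a four-line trace that starts with `parse_amount: amount=120.5`.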

Pipelines should also be designed for continuous improvement—allowing automatic updates and retraining when data distributions shift or when business logic evolves.
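One common way to decide when retraining is due is to compare the distribution of recent predictions with the distribution seen at training time. The sketch below computes the Population Stability Index (PSI) over categorical labels. The 0.2 threshold is a widely used rule of thumb rather than a universal constant, and `needs_retraining` is a hypothetical helper name.

```python
import math
from collections import Counter

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two categorical samples.

    PSI = sum over categories of (a - e) * ln(a / e), where e and a are the
    category proportions in the expected and actual samples. Proportions are
    clamped to eps so that a category missing from one sample avoids log(0).
    """
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for category in set(e_counts) | set(a_counts):
        e = max(e_counts[category] / len(expected), eps)
        a = max(a_counts[category] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total

def needs_retraining(train_labels, recent_labels, threshold=0.2):
    # 0.2 is a common rule-of-thumb cut-off for a significant shift.
    return psi(train_labels, recent_labels) > threshold
```

For instance, suppose the training set splits 50/50 between two document types and a recent window splits 90/10. The PSI is then about 0.88, well above the threshold.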

4. Mandated Self-Reflection

Reflection isn’t just a gimmick; it’s a safeguard. In our workflow, it can take the form of:

- A second model that validates outputs

- A logic-based rule engine

- A confidence-threshold check

Self-reflection is embedded structurally, not as a soft suggestion to the model. This approach helps catch errors early, promotes accountability, and improves auditability—especially in regulated environments.
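The three checks listed above can be combined into a single reflection step that returns a list of issues. An empty list means the output may proceed. The `Prediction` type, the 0.85 threshold, and the non-negative `total` rule are hypothetical, and the second model is represented only by the label it returns.

```python
from dataclasses import dataclass, field

@dataclass
class Prediction:
    label: str
    confidence: float
    fields: dict = field(default_factory=dict)

def rule_checks(pred: Prediction) -> list:
    """Logic-based rules; here, a single hypothetical business rule."""
    issues = []
    total = pred.fields.get("total")
    if total is not None and total < 0:
        issues.append("total must be non-negative")
    return issues

def reflect(pred: Prediction, second_opinion: str, min_confidence: float = 0.85) -> list:
    """Run all structural checks and return the issues found (empty list = pass)."""
    issues = []
    # Confidence threshold check
    if pred.confidence < min_confidence:
        issues.append(f"confidence {pred.confidence:.2f} below {min_confidence}")
    # Second model validating the output, reduced here to its predicted label
    if second_opinion != pred.label:
        issues.append(f"validator disagrees: {second_opinion!r}")
    # Rule engine
    issues.extend(rule_checks(pred))
    return issues
```

Because every issue is returned as a readable string, the same output can be written to an audit log.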

5. Escalation as a Service

Not every case can be handled by lightweight models, and that’s okay. For edge cases or low-confidence outputs, the system can escalate selectively to a larger LLM such as OpenAI’s o1. Compared with sending every request to the large model, this sharply reduces token consumption while maintaining quality. Escalation does add latency and cost on these exceptional paths, but it keeps quality from suffering on critical or ambiguous inputs.
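A back-of-the-envelope cost model makes the savings claim concrete. It rests on three simplifying assumptions, which are not vendor pricing. Every request first runs through the small-model pipeline at cost $c_s$. A fraction $p$ of requests is then escalated to the large model at cost $c_L$ per call. Escalated requests pay both costs:

$$
C_{\text{hybrid}} = c_s + p\,c_L, \qquad C_{\text{LLM-only}} = c_L
$$

$$
C_{\text{hybrid}} < C_{\text{LLM-only}} \iff c_s + p\,c_L < c_L \iff p < 1 - \frac{c_s}{c_L}
$$

Take purely illustrative values of $c_s = 0.001$, $c_L = 0.02$, and $p = 0.1$, in the same currency unit. The hybrid cost is then $0.001 + 0.1 \times 0.02 = 0.003$ per request, 85% below the LLM-only cost of $0.02$. The advantage shrinks as $p$ grows, which is why the escalation rate is worth monitoring.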

Enterprises should clearly define the types of tasks where escalation is warranted. These may include:

- Complex legal or financial reasoning

- Multi-turn conversational flows

- Ambiguous queries without clear intent
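An escalation policy like this can live in configuration rather than in prompts. The sketch below defines a hypothetical `route` function. Its category names mirror the list above, and the 0.8 confidence threshold is an assumed default to be tuned per task.

```python
# Hypothetical category names mirroring the escalation list above.
ESCALATION_CATEGORIES = {
    "legal_financial_reasoning",
    "multi_turn_conversation",
    "ambiguous_intent",
}

def route(category: str, confidence: float, threshold: float = 0.8) -> tuple:
    """Return (target, reason) so every routing decision is explainable."""
    if category in ESCALATION_CATEGORIES:
        return "large_llm", f"category '{category}' always escalates"
    if confidence < threshold:
        return "large_llm", f"confidence {confidence:.2f} < {threshold}"
    return "small_model", "handled by the lightweight pipeline"
```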

Cost Meets Capability

By orchestrating small models within a smart, rule-driven pipeline, enterprises gain:

- Substantial inference cost savings

- Lower latency

- Greater interpretability

- Auditability and governance

However, these benefits must be weighed against the total cost of ownership, including infrastructure for MLOps, model lifecycle management, data annotation, and monitoring. In some cases, a single large-model API may offer a faster path to deployment—albeit at a premium.
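Whether the pipeline pays off depends on volume. The sketch below computes a break-even point under three simplifying assumptions of our own. The pipeline has a fixed monthly cost for MLOps, annotation, and monitoring. Every request runs through the small-model pipeline. A fraction of requests is additionally escalated to the large model. The function name and all figures are hypothetical.

```python
import math

def break_even_requests(fixed_monthly: float, small_cost: float,
                        large_cost: float, escalation_rate: float):
    """Smallest monthly request volume at which the hybrid pipeline's total
    cost is no higher than sending every request to the large model."""
    hybrid_per_request = small_cost + escalation_rate * large_cost
    savings_per_request = large_cost - hybrid_per_request
    if savings_per_request <= 0:
        return math.inf  # the pipeline never recovers its fixed costs
    # fixed + n * hybrid <= n * large  <=>  n >= fixed / savings_per_request
    return math.ceil(fixed_monthly / savings_per_request)
```

Consider hypothetical figures of 10,000 per month in fixed costs, 0.001 per request for the pipeline, 0.02 per large-model call, and a 10% escalation rate. With these, the function returns roughly 588,000 requests per month. Below that volume, a single large-model API is the cheaper option.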

Additionally, enterprises choosing to adopt this strategy must ensure they have the right skill sets in-house. This includes MLOps practitioners, data engineers, and domain experts. Hiring and training for these roles can pose operational challenges.

LLMs are not being replaced—they are being reserved for when their magic is truly needed.

Looking Ahead

The next phase in enterprise AI is not a bigger hammer—it’s a smarter toolkit. When you chain the right tools in the right order, even modest models can rival the giants.

Just as a slingshot wins through strategy rather than strength, well-orchestrated, targeted agents can deliver outsized value without incurring outsized cost.

Organizations must weigh trade-offs between cost, time-to-market, and team capacity. Sometimes, paying more for a one-model solution makes sense; other times, building pipelines from small models offers better long-term scalability and governance.

Small is not just beautiful—it’s powerful, when aimed with precision.